RESEARCH

What we do

How is it that music moves us and bonds us so deeply? In the BEAT Lab, we are interested in the relationship between musical time and bodily time, in connections between music theory and psychology, in computational modeling of attention and motor behavior, and in how music synchronizes social groups. At the broadest level, we study musical engagement to learn about fundamental dynamics of the nervous system and, conversely, we use cognitive neuroscientific methods to create more immersive musical experiences.

We are particularly interested in:

- studying dynamic attention in social contexts (e.g., multi-person mobile eye-tracking in the concert hall),

- using computational models to predict how music will alter listeners' attention, motor behavior, and subjective experience over time,

- integrating our understanding of music-induced physiological changes across the nervous system (e.g., by simultaneously measuring activity from the brain, eyes, and heart to understand how signals from these different nervous subsystems relate to one another),

- studying how behavioural and physiological dynamics change in social contexts, and whether real-time, adaptive, assistive devices can be used to help people create music together and form social bonds,

- creating more immersive musical experiences with scientific technologies.

Our research is supported by the Canada Foundation for Innovation and the Natural Sciences and Engineering Research Council of Canada.

FACILITIES & EQUIPMENT

Where/How we do it

BEAT Lab

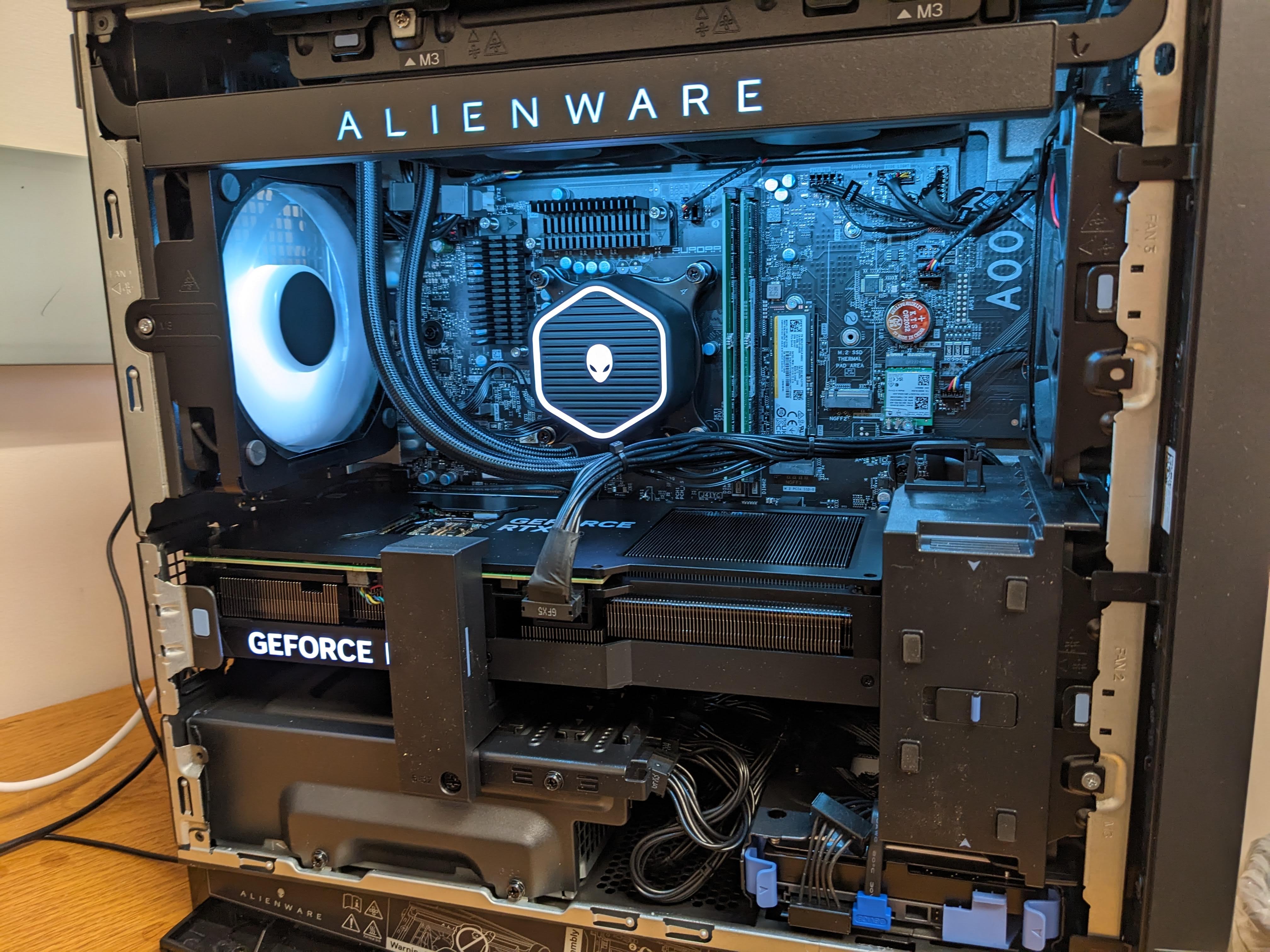

Individual and small-group studies (up to 5 people) are conducted in the BEAT Lab, which houses a range of state-of-the-art computing and physiological recording hardware. A non-exhaustive list is provided below:

- Alienware R15 Desktop with 13th Gen Intel® Core™ i9 13900KF, 24-core CPU, NVIDIA GeForce RTX™ 4090, 64 GB RAM. For projects requiring more computing power than this machine can offer, we have access to the Advanced Research Computing platform, SHARCNET, provided by the Digital Research Alliance of Canada.

- Mac mini M2 Pro with 12-core CPU, 19-core GPU, 16-core Neural Engine, 32 GB unified memory.

- 30 Pupil Labs Neon mobile eye-tracking glasses, which record eye data, world audiovisual data, and head motion. Pupil Labs' API is open source, allowing for customizable real-time applications.

- 40 Bangle.js2 smartwatches, which record cardiac activity, motion, GPS, etc., and also have a vibration motor. These watches are open source and fully customizable.

- 5 Bela microcontrollers, which can be used for real-time, embedded audiovisual applications. They are open source and offer very low latency.

- 5 Arduino microcontrollers, which can serve a variety of functions. For example, we conduct group tapping studies using the previously developed open-source Groove Enhancement Machine.

- HTC Vive Focus 3 virtual reality headset with built-in eye and face tracking.

Renovated in summer 2023, the BEAT Lab, housed in the Psychology Department, has new lighting, ceilings, floors, and paint. There are six small rooms that can be used as individual offices or experimental testing rooms. One large room serves as a central meeting area with a small kitchenette or as a small-group experiment environment; a second, larger room is a communal workspace, control area, and music research space.

LIVELab

Large-group studies, with up to 100 people in a music concert context, are conducted in McMaster's globally unique Large Interactive Virtual Environment (LIVELab). In the LIVELab, we have access to active acoustics that can simulate any environment, electroencephalography (EEG), peripheral physiological recording equipment (e.g., to measure galvanic skin response (GSR) and blood volume pulse (BVP)), a Yamaha Disklavier, a video wall, and a KEMAR manikin, among other equipment.